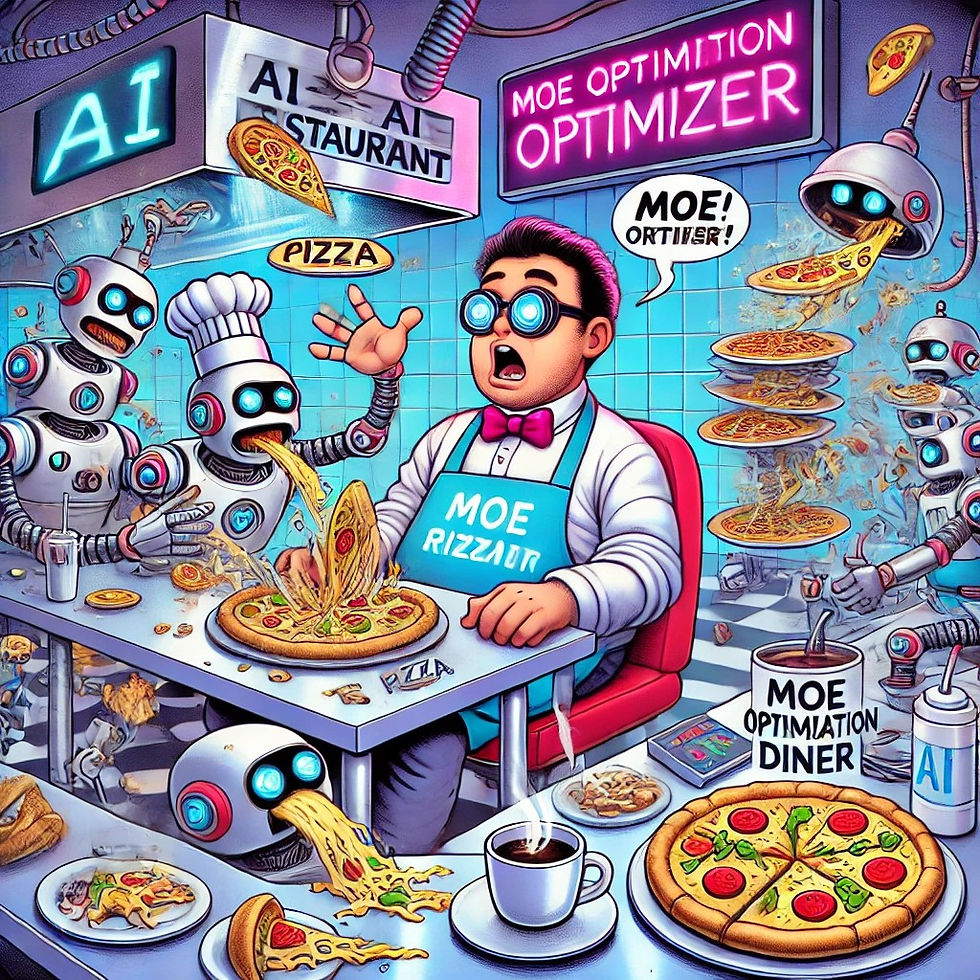

🚀 DeepSeek-V3: The AI Restaurant That Serves Up MoE Magic 🍕🤖

- Alan Lučić

- Jan 29, 2025

- 2 min read

The AI world is feasting on DeepSeek-V3, an open-source Mixture-of-Experts (MoE) LLM with 671B total parameters, of which only about 37B activate per token, making it both powerful and efficient. It's a GPT-4-level contender trained on 14.8 trillion tokens, yet its final training run reportedly cost only about $5.58M in GPU time (a fraction of GPT-4's rumored budget).
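For a sense of where that training-cost figure comes from, here is the back-of-the-envelope arithmetic. The GPU-hour count and the $2 per GPU-hour rental price are the figures reported in the DeepSeek-V3 technical report; the rental price is an assumption made there, not a measured bill, and the total excludes earlier research and ablation runs.

```python
# Reported figures: about 2.788 million H800 GPU-hours for the full training run,
# priced at an ASSUMED rental rate of $2 per GPU-hour.
GPU_HOURS = 2.788e6
PRICE_PER_GPU_HOUR = 2.0

training_cost = GPU_HOURS * PRICE_PER_GPU_HOUR
print(f"Estimated training cost: ${training_cost / 1e6:.3f}M")
```

Change the rental price and the headline number moves with it, so treat it as an estimate rather than an invoice.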

Sounds revolutionary, right? But let's put it in restaurant terms, because MoE optimization is just like running a chaotic kitchen. 🍽️👨‍🍳

🔥 The AI Kitchen: How MoE Works (and Sometimes Fails)

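DeepSeek-V3 doesn't put the whole kitchen to work on every order. Of its 671B parameters, only about 37B (roughly 5.5%) are active for any given token: each MoE layer has 256 routed experts (the specialist chefs) plus one shared expert, and just 8 routed experts are called upon per token. This boosts efficiency, but brings its own set of headaches.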

🔪 Chef Selection Problem (Routing)

If a guest orders sushi, you want the Japanese chefs to cook it, not the Italians! 🍣🍕

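DeepSeek-V3 handles this with a learned gating network (the router in its DeepSeekMoE layers), which scores every expert for each token and sends the token to the top-scoring few. Multi-Head Latent Attention (MLA), often mentioned in the same breath, does a different job: it compresses the attention key-value cache to save memory during inference.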
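To make the chef selection concrete, here is a minimal sketch of top-k routing. It assumes sigmoid affinity scores that are normalized over the chosen experts; the chef names, the 2-dimensional vectors, and the `route` function are all made up for illustration and are not DeepSeek's code.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def route(token, expert_vectors, k):
    """Return {expert_index: gate_weight} for the k best-matching experts."""
    # Affinity of the token to each expert: sigmoid of a dot product.
    scores = [sigmoid(sum(t * e for t, e in zip(token, vec)))
              for vec in expert_vectors]
    # Keep only the k highest-scoring experts.
    top_k = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Normalize the kept scores so the gate weights sum to 1.
    total = sum(scores[i] for i in top_k)
    return {i: scores[i] / total for i in top_k}

# Hypothetical kitchen: four chefs, each described by a toy 2-d vector.
CHEFS = ["sushi", "pizza", "pastry", "noodles"]
EXPERT_VECTORS = [[2.0, 0.0], [-1.0, 1.0], [0.5, 0.5], [1.0, -1.0]]

order = [1.0, 0.0]  # a toy "sushi-like" token
gates = route(order, EXPERT_VECTORS, k=2)
for index, weight in gates.items():
    print(CHEFS[index], round(weight, 3))
```

Only the two selected chefs cook this order; the others stay idle for this token, which is where the efficiency comes from.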

🍕 Overworked vs. Lazy Chefs (Load Balancing Issue)

If everyone orders pizza, Italian chefs burn out while French and Chinese chefs twiddle their thumbs.

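Auxiliary-Loss-Free Load Balancing helps spread the workload evenly among experts: instead of adding a balancing penalty to the training loss, it gives each expert a bias on its routing score, nudged down when the expert is overloaded and up when it is underused.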
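The bias idea is easy to try in a toy simulation. The sketch below is an illustration under simplifying assumptions, not DeepSeek's implementation: four hypothetical experts, one expert per token, random noise standing in for token-to-expert affinity, and a fixed step size `gamma`. Expert 0 plays the pizza chef everyone wants.

```python
import random

def pick_expert(scores, biases):
    # The bias only influences which expert is selected.
    return max(range(len(scores)), key=lambda i: scores[i] + biases[i])

def simulate(steps, batch_size=256, gamma=0.01, balance=True, seed=0):
    """Return each expert's share of tokens over the last 20 steps."""
    rng = random.Random(seed)
    base = [0.9, 0.5, 0.5, 0.5]  # expert 0 is the over-popular one
    biases = [0.0] * len(base)
    recent = [0] * len(base)
    for step in range(steps):
        load = [0] * len(base)
        for _ in range(batch_size):
            scores = [b + rng.uniform(-0.2, 0.2) for b in base]
            load[pick_expert(scores, biases)] += 1
        if balance:
            mean_load = batch_size / len(base)
            for i in range(len(base)):
                if load[i] > mean_load:
                    biases[i] -= gamma  # overloaded: make it less attractive
                elif load[i] < mean_load:
                    biases[i] += gamma  # underused: make it more attractive
        if step >= steps - 20:
            recent = [r + l for r, l in zip(recent, load)]
    total = sum(recent)
    return [r / total for r in recent]

unbalanced = simulate(100, balance=False)
balanced = simulate(100, balance=True)
print("without bias updates:", [round(s, 2) for s in unbalanced])
print("with bias updates:   ", [round(s, 2) for s in balanced])
```

Without the bias updates, expert 0 takes every token; with them, the shares drift toward an even split, and no extra loss term is involved.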

⏳ Fast Food vs. Fine Dining (Training and Inference Efficiency)

Nobody likes waiting forever for their order. On the training side, DeepSeek-V3 uses DualPipe pipeline parallelism to overlap computation with cross-node communication, which hides most of the communication overhead and keeps the GPUs busy.

It is also trained in FP8 mixed precision for memory efficiency, and the FP8 weights help inference scale without draining GPUs.
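A rough calculation shows why the number format matters. This sketch counts weight storage only; it ignores activations, the KV cache, optimizer state, and the parts of the model kept in higher precision, so the real footprint will differ. The helper `weight_gb` is a hypothetical name used for illustration.

```python
TOTAL_PARAMS = 671e9  # total parameter count from the article

def weight_gb(num_params, bytes_per_param):
    """Storage for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

fp8_gb = weight_gb(TOTAL_PARAMS, 1)   # FP8 uses 1 byte per parameter
bf16_gb = weight_gb(TOTAL_PARAMS, 2)  # BF16 uses 2 bytes per parameter
print(f"FP8 weights:  {fp8_gb:.0f} GB")
print(f"BF16 weights: {bf16_gb:.0f} GB")
```

Halving the bytes per parameter halves the weight footprint, but all 671B parameters still have to live somewhere, even though only about 37B are used per token.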

🤨 But Is MoE a Michelin-Starred Model or Just Another Gimmick?

✅ Benchmark Beast: DeepSeek-V3 dominates MMLU, GPQA, Codeforces, and SWE-Bench, beating most open-source rivals.

⚠️ Real-World Test? Great scores, but can it handle messy real-world use cases beyond benchmarks?

⚠️ Scalability Struggles: Training was cheap, but MoE inference is hardware-intensive, making deployment complex.

⚠️ MoE Models Are Unpredictable: Some experts get overloaded, some barely work, causing efficiency issues at scale.

🚀 The Verdict?

DeepSeek-V3 is a huge leap for open-source LLMs, bringing state-of-the-art cost efficiency and inference speed optimizations. But can MoE truly scale to production-level AI services, or will it remain a cool-but-clunky experiment?

💬 What do you think? Is MoE the future, or just a fancy trick with too many moving parts? Let’s talk! 👇🍽️🤖
